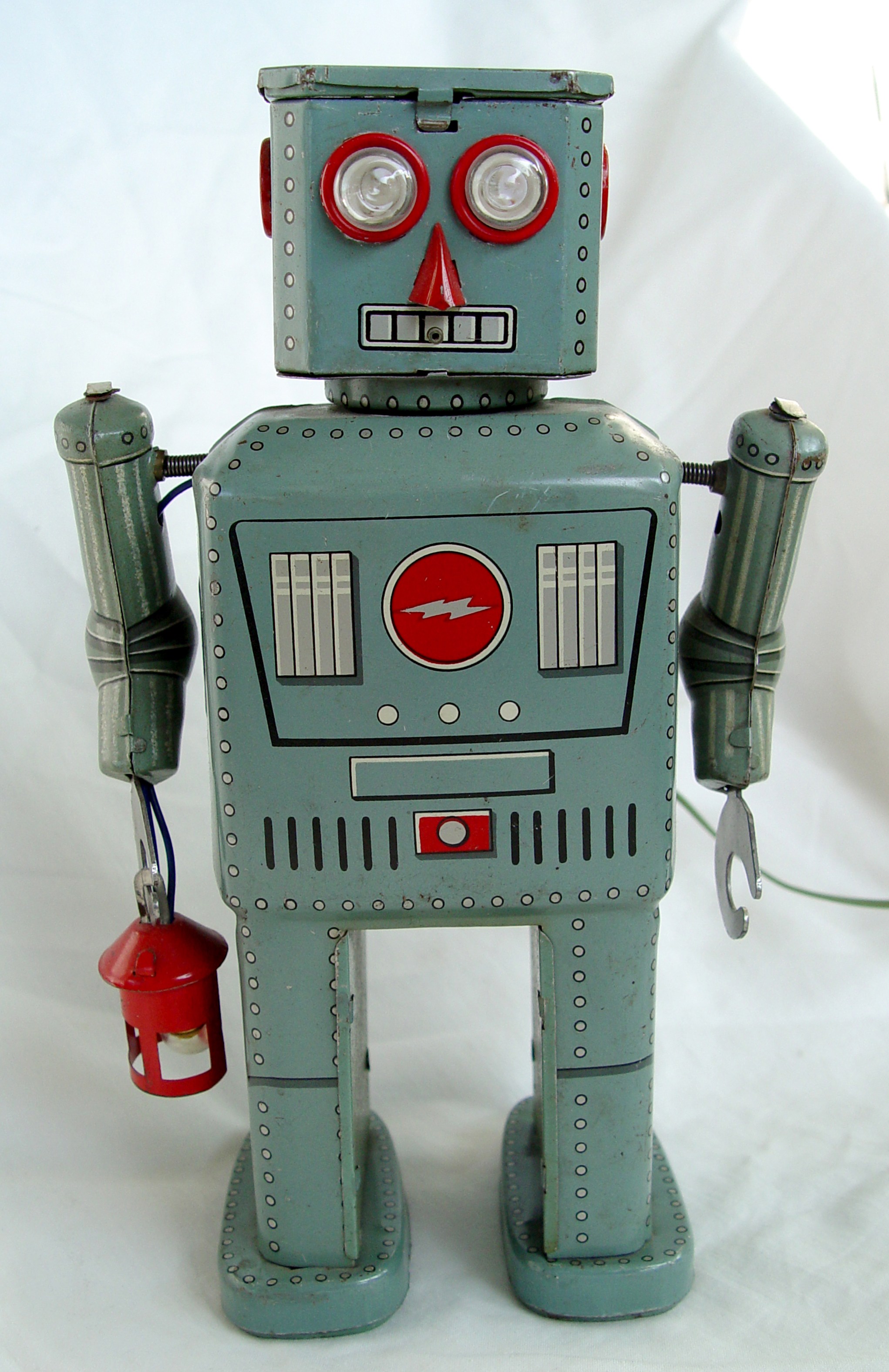

For anyone out there whose greatest fear is the rise of the machines, I’ve got some bad news for you: scientists at UW are hard at work developing more knowledgeable artificial intelligence designed to help robots better learn and obey commands.

The Robotics and State Estimation Lab, headed by Dieter Fox, an associate professor in the computer science and engineering department, recently published a paper detailing how researchers have enhanced and refined the computer’s ability to complete tasks and learn things it doesn’t understand. Where they’ve made big strides, says Fox, is in getting the robots to understand natural language.

“The robot needs to understand all the different ways humans might refer to an object,” says Fox. “If you want to tell the robot to bring the coffee mug, you don’t say ‘object 365,’ you say ‘coffee mug on the desk.’ We want the robot to understand what people mean in a natural way.”

The team used Amazon Mechanical Turk, an online crowd-sourcing marketplace where computer scientists can ask people for help with tasks that computers can’t do. They used Mechanical Turk to gather data on how people naturally refer to everyday household objects. Using these findings, Fox’s lab was able to teach the robots how to use identifying qualities like shape, color, material, or name to distinguish one object from the others.
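
For the curious, here is a toy sketch of what picking out one object by its qualities might look like. It is not the lab's method: the attributes here are hard-coded by hand rather than learned from crowd-sourced descriptions, and every name in it (`SceneObject`, `resolve_reference`, the object numbers) is made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical record for one object the robot can see."""
    object_id: int
    name: str
    color: str
    shape: str
    material: str


def resolve_reference(description, scene):
    """Return the objects whose attributes match the most words in the description.

    A single result means the description picked out one object; several
    results mean the description is ambiguous; an empty list means no
    attribute matched at all.
    """
    words = set(description.lower().split())

    def score(obj):
        # Count how many of the object's attributes appear in the description.
        attributes = {obj.name, obj.color, obj.shape, obj.material}
        return len(words & attributes)

    best = max((score(obj) for obj in scene), default=0)
    if best == 0:
        return []
    return [obj for obj in scene if score(obj) == best]


# Illustrative scene; the values are invented for this example.
scene = [
    SceneObject(365, "mug", "white", "cylinder", "ceramic"),
    SceneObject(366, "mug", "blue", "cylinder", "plastic"),
    SceneObject(367, "block", "blue", "cube", "wood"),
]

matches = resolve_reference("the blue mug", scene)
print([obj.object_id for obj in matches])
```

Asking for "the blue mug" singles out one object, while "the mug" alone matches two, which is the kind of ambiguity a real system would have to resolve, for example by asking a follow-up question.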

The idea is that the robot has to correlate natural human grammar with the control program it’s running, which is kind of a big deal. Beyond just fetching Oreos or mugs from counters, these robots could ask for and follow directions through an unknown building to a specific room.

“Robots are becoming more and more important,” says Fox, who highlighted how useful they can be in assisting elderly people and in manufacturing. “They’re not going to be working with specialists anymore, it’ll be anybody. It’s crucial for robots to…be able to work in a setting that’s much more open ended.”

Fox says the next step is to make the learning tasks harder and less constrained to specific scenarios. He even hopes to incorporate gesturing into a robot’s understanding of what people are asking for.

On an unrelated note, has anyone seen Sarah Connor around?